Divide and conquer. How to manage multiple data streams

We recently completed the development of a bespoke secure portal for a client and, happily, off the back of that successful piece of work, we’ve been given the go-ahead to proceed with an interesting second-stage project. This new project aims to automate and improve how data moves around the client’s business (an area we’re experienced in) by wrangling multiple data streams.

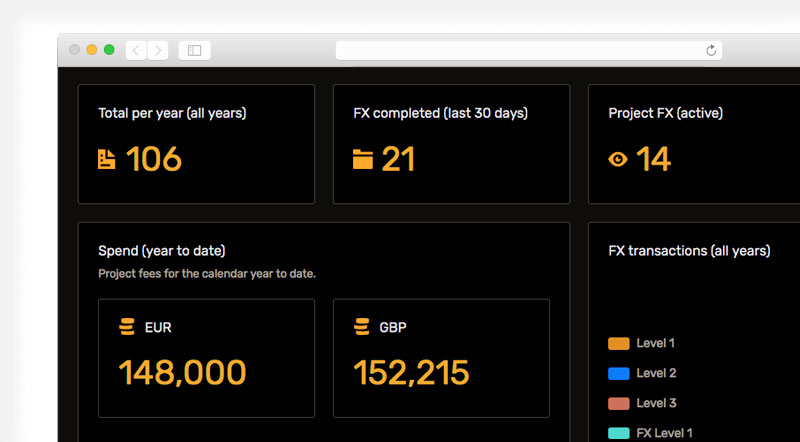

Currently, staff have to manually fetch information from these data streams and identify information of value to their various clients. This isn’t the most scalable of solutions (it also seems rather tedious), and the service is growing fast. To automate this, we need to continually pull data from multiple sources (some of them awkward legacy systems) and filter out items of interest that can be flagged for a human to review in their new secure portal.

These data streams all provide information in different ways. One is a simple REST API with JSON data; another requires us to configure filters via one API and pull in an unpredictable collection of data via a second API service (this will send us a LOT of data). Each data source needs its data collected, its filters managed, and its data processed and queued for review in a way that is quick and easy for a human to manage.

Divide and conquer

This is undeniably a dauntingly complex project to implement. How can we do all that and also understand and fix problems when it breaks? Certainly not with a monolithic application. The solution was identified back in the 70s in the Unix philosophy, and was more recently spelled out as the 17 Unix rules: you break each part of the project down into small components that do one thing and do it well. This is sometimes called a microservices approach.

In our solution, we broke each requirement down and solved it with its own isolated component, each of which can be run independently of the others. For example, we wrote a Go application with the sole job of making sure that the “Firehose” API’s filters are correct. We wrote another that only collects the raw data from the Firehose API and puts it into a message queue. Another component translates the Firehose data into our common data format, and so forth.
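To give a feel for how small one of these components can be, here is a minimal sketch of a translator. The `FirehoseItem` and `Item` types and their fields are hypothetical, invented for illustration; the real Firehose payload and the common data format are not shown in this post.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// FirehoseItem is a hypothetical shape for one raw Firehose message.
type FirehoseItem struct {
	ID   string `json:"id"`
	Body string `json:"body"`
}

// Item is a hypothetical common format shared by every data source.
type Item struct {
	Source string
	ID     string
	Text   string
}

// Translate does one job: turn a raw Firehose message into an Item.
func Translate(raw []byte) (Item, error) {
	var f FirehoseItem
	if err := json.Unmarshal(raw, &f); err != nil {
		return Item{}, fmt.Errorf("decode firehose item: %w", err)
	}
	return Item{
		Source: "firehose",
		ID:     f.ID,
		Text:   strings.TrimSpace(f.Body),
	}, nil
}

func main() {
	item, err := Translate([]byte(`{"id":"42","body":"  hello  "}`))
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("%+v\n", item)
}
```

Because the translator knows nothing about collecting or reviewing data, it can be tested, deployed and replaced on its own.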

This bespoke build approach to services (rather than relying on ready-built services) means that we can clearly design each feature to the client’s exact specifications and independently deploy them into a cooperative collection of services. Good use of message queues to decouple each component means that if one goes down the rest need not grind to a halt.
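The decoupling idea can be sketched in a few lines. This example uses a buffered Go channel as an in-process stand-in for a message queue, which is an assumption made purely for illustration: a real broker would sit between separate processes and typically persist messages. `Enqueue` is a hypothetical helper, not part of any library.

```go
package main

import "fmt"

// Enqueue tries to hand msg to the queue without blocking the caller.
// If the queue is full (for example, because the consumer is down),
// it returns an error instead of stalling the collector.
func Enqueue(queue chan<- string, msg string) error {
	select {
	case queue <- msg:
		return nil
	default:
		return fmt.Errorf("queue full, could not enqueue %q", msg)
	}
}

func main() {
	// Capacity 2 stands in for the buffering a real queue provides.
	queue := make(chan string, 2)

	// The collector keeps running even though nothing is consuming yet.
	for _, msg := range []string{"item-1", "item-2", "item-3"} {
		if err := Enqueue(queue, msg); err != nil {
			fmt.Println("collector:", err)
		}
	}
	close(queue)

	// The processor comes back later and drains whatever was buffered.
	for msg := range queue {
		fmt.Println("processor handled", msg)
	}
}
```

The collector never waits on the processor, so an outage in one component shows up as a growing queue, not as a failure everywhere else.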

Be like Starbucks

Last year I read an excellent blog post by Weronika Łabaj on the lessons that software developers can learn from Starbucks, the popular coffee chain.

With the advent of new technologies* such as Docker and the various schedulers that support it, we can treat our servers like Starbucks employees. Each component can be individually scaled in response to demand. Got more data to process than you have to collect? That’s fine, take some system resources from one and use them for the other.
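The same idea works in miniature inside a single program. The sketch below uses goroutines in place of containers, which is an assumption for illustration only; `ProcessAll` and `Flag` are hypothetical names. Changing the `workers` argument is the small-scale equivalent of asking a scheduler for more copies of a component.

```go
package main

import (
	"fmt"
	"sync"
)

// Flag is a hypothetical processing step applied to each item.
func Flag(item string) string {
	return fmt.Sprintf("flagged:%s", item)
}

// ProcessAll runs handle over items using the given number of workers.
// Results are written by index, so the output order matches the input.
func ProcessAll(items []string, workers int, handle func(string) string) []string {
	if workers < 1 {
		workers = 1
	}
	type job struct {
		idx  int
		item string
	}
	jobs := make(chan job)
	results := make([]string, len(items))

	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for j := range jobs {
				// Each job owns a distinct index, so no locking is needed.
				results[j.idx] = handle(j.item)
			}
		}()
	}
	for i, it := range items {
		jobs <- job{idx: i, item: it}
	}
	close(jobs)
	wg.Wait()
	return results
}

func main() {
	items := []string{"a", "b", "c", "d"}
	// More processing than collecting to do? Raise the worker count.
	fmt.Println(ProcessAll(items, 3, Flag))
}
```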

*Yes, I know, mainframes had it decades ago.